Latest revision as of 19:26, 18 September 2013

Information retrieval (IR) is the art and science of searching for information in documents, searching for documents themselves, searching for metadata that describe documents, or searching within databases, whether stand-alone relational databases or hypertext networked databases such as the Internet or intranets, for text, sound, images or data. Data retrieval, document retrieval, information retrieval, and text retrieval are commonly confused, however, and each of these has its own body of literature, theory, praxis and technologies.

The term "information retrieval" was coined by Calvin Mooers in 1948-50.

IR is a broad interdisciplinary field that draws on many other disciplines. Because it is so broad, it is often poorly understood and is typically approached from only one perspective or another. It stands at the junction of many established fields, and draws upon cognitive psychology, information architecture, information design, human information behaviour, linguistics, semiotics, information science, computer science and librarianship.

Automated information retrieval (IR) systems were originally used to manage the information explosion in scientific literature in the last few decades. Many universities and public libraries use IR systems to provide access to books, journals, and other documents. IR systems are usually described in terms of two notions, the query and the object. Queries are formal statements of information needs that the user puts to an IR system. An object is an entity that keeps or stores information in a database. User queries are matched to documents stored in a database; a document is, therefore, a data object. Often the documents themselves are not kept or stored directly in the IR system, but are instead represented in the system by document surrogates.
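As a rough illustration of matching a query against document surrogates, the following minimal sketch represents each surrogate as a set of index terms and returns the documents whose surrogate contains every query term. The document identifiers, the index terms, and the function name `match_all` are hypothetical, and real systems use far richer representations and matching rules.

```python
# Hypothetical document surrogates: each document is represented only by
# a set of index terms, not by its full text.
surrogates = {
    "doc1": {"information", "retrieval", "evaluation"},
    "doc2": {"library", "catalogue", "retrieval"},
    "doc3": {"cognitive", "psychology"},
}


def match_all(query_terms, surrogates):
    """Return the ids of documents whose surrogate contains every query term."""
    query = set(query_terms)
    # An empty query is a subset of every surrogate, so it matches everything.
    return sorted(doc_id for doc_id, terms in surrogates.items() if query <= terms)


print(match_all(["retrieval"], surrogates))
print(match_all(["retrieval", "library"], surrogates))
```

Requiring every term to be present is only one possible matching rule; it was chosen here because it is the simplest to state.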

In 1992 the US Department of Defense, along with the National Institute of Standards and Technology (NIST), cosponsored the Text Retrieval Conference (TREC) as part of the TIPSTER text program. Its aim was to support the information retrieval community by supplying the infrastructure needed for large-scale evaluation of text retrieval methodologies.

Web search engines such as Google and Lycos are amongst the most visible applications of information retrieval research.

Performance measures

There are various ways to measure how well the retrieved information matches the intended information:

Precision

The proportion of retrieved documents that are relevant:

- P = (number of relevant documents retrieved) / (number of documents retrieved)

In binary classification, precision is analogous to positive predictive value. Precision can also be evaluated at a given cut-off rank, denoted P@n, instead of all retrieved documents.
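The precision formula and the cut-off variant P@n can be sketched as follows. This is a minimal illustration, not a reference implementation: the function names, the sample document identifiers, and the convention of returning 0.0 for an empty result list are all assumptions made for the example.

```python
def precision(retrieved, relevant):
    """Fraction of the retrieved documents that are relevant."""
    if not retrieved:
        return 0.0  # assumed convention for an empty result list
    relevant = set(relevant)
    hits = sum(1 for doc in retrieved if doc in relevant)
    return hits / len(retrieved)


def precision_at(retrieved, relevant, n):
    """P@n: precision computed over only the top n ranked results."""
    return precision(retrieved[:n], relevant)


ranked = ["d3", "d1", "d7", "d2", "d9"]  # hypothetical ranked result list
relevant = {"d1", "d2", "d4"}            # hypothetical relevance judgments

print(precision(ranked, relevant))        # 2 of the 5 retrieved are relevant
print(precision_at(ranked, relevant, 2))  # 1 of the top 2 is relevant
```

Note that when fewer than n documents are retrieved, this sketch divides by the number actually retrieved rather than by n; some evaluations make the opposite choice.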

Recall

The proportion of relevant documents that are retrieved, out of all relevant documents available:

- R = (number of relevant documents retrieved) / (number of relevant documents)

In binary classification, recall is called sensitivity.
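Recall can be sketched in the same style. The function name, the sample data, and the choice to return 0.0 when there are no relevant documents are assumptions made for illustration.

```python
def recall(retrieved, relevant):
    """Fraction of all relevant documents that were retrieved."""
    relevant = set(relevant)
    if not relevant:
        return 0.0  # assumed convention when nothing is relevant
    hits = len(relevant & set(retrieved))
    return hits / len(relevant)


retrieved = ["d3", "d1", "d7", "d2", "d9"]  # hypothetical result list
relevant = {"d1", "d2", "d4"}               # hypothetical relevance judgments

print(recall(retrieved, relevant))  # 2 of the 3 relevant documents were found
```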

F-measure

The weighted harmonic mean of precision and recall, the traditional F-measure is:

- $F = \frac{2 \cdot P \cdot R}{P + R}$

This is also known as the $F_1$ measure, because recall and precision are evenly weighted.

The general formula is:

- $F_\beta = \frac{(1 + \beta^2) \cdot P \cdot R}{\beta^2 \cdot P + R}$

Two other commonly used F measures are the $F_{0.5}$ measure, which weights precision twice as much as recall, and the $F_2$ measure, which weights recall twice as much as precision.
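The weighted harmonic mean of precision and recall can be sketched as a single function with a weight parameter, here called `beta` (a name assumed for the example). With `beta=1` it reduces to 2PR / (P + R); values above 1 favour recall and values below 1 favour precision. Returning 0.0 when the denominator is zero is an assumed convention.

```python
def f_measure(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall."""
    b2 = beta * beta
    denominator = b2 * precision + recall
    if denominator == 0:
        return 0.0  # assumed convention, e.g. when precision and recall are both 0
    return (1 + b2) * precision * recall / denominator


p, r = 0.4, 2 / 3  # hypothetical precision and recall values

print(f_measure(p, r))            # evenly weighted
print(f_measure(p, r, beta=2.0))  # recall weighted more heavily
print(f_measure(p, r, beta=0.5))  # precision weighted more heavily
```

Because recall is higher than precision in this sample, the recall-weighted score comes out highest and the precision-weighted score lowest.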

Mean average precision

Over a set of queries, mean average precision is the mean of the per-query average precisions, where average precision is the average of the precision values obtained after each relevant document is retrieved.

Where r is the rank, N the number retrieved, rel() a binary function on the relevance of a given rank, and P() precision at a given cut-off rank:

- $\mathrm{AveP} = \frac{\sum_{r=1}^{N} P(r) \cdot \mathrm{rel}(r)}{\text{number of relevant documents}}$

This method emphasizes returning more relevant documents earlier.
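Average precision and its mean over a set of queries can be sketched as below. The sketch assumes each ranked list contains no duplicate documents and divides by the total number of relevant documents for the query; the function names and sample data are hypothetical.

```python
def average_precision(ranked, relevant):
    """Sum of P(r) * rel(r) over ranks r, divided by the number of relevant documents."""
    relevant = set(relevant)
    if not relevant:
        return 0.0  # assumed convention when nothing is relevant
    hits = 0
    total = 0.0
    for r, doc in enumerate(ranked, start=1):
        if doc in relevant:    # rel(r) = 1
            hits += 1
            total += hits / r  # P(r): precision at cut-off rank r
    return total / len(relevant)


def mean_average_precision(runs):
    """Mean of the average precisions over (ranked, relevant) pairs, one per query."""
    if not runs:
        return 0.0
    return sum(average_precision(ranked, rel) for ranked, rel in runs) / len(runs)


runs = [
    (["d3", "d1", "d7", "d2", "d9"], {"d1", "d2", "d4"}),  # hypothetical query 1
    (["a", "b"], {"a"}),                                   # hypothetical query 2
]

print(mean_average_precision(runs))
```

In the first query the relevant documents appear at ranks 2 and 4, so each contributes a precision of 0.5; moving either of them higher in the ranking would raise the score.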

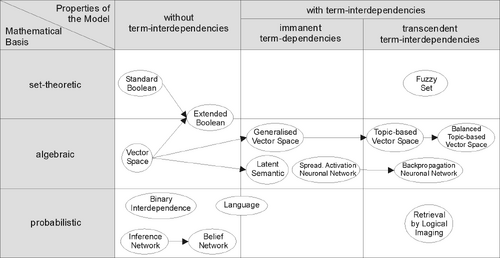

Model types

classification of IR-models

For information retrieval to be successful, the documents must be represented in some way. A number of models exist for this purpose, and they can be roughly divided into three main groups:

Set-theoretic / Boolean models

- Standard Boolean model

- Extended Boolean model

- Fuzzy retrieval

Algebraic / vector space models

- Vector space model

- Generalized vector space model

- Topic-based vector space model

- Enhanced topic-based vector space model

- Latent semantic indexing, also known as latent semantic analysis

Probabilistic models

- Binary independence retrieval

- Uncertain inference

- Language models

- Divergence from randomness models

Open source information retrieval systems

- GalaTex XQuery Full-Text Search (XML query text search)

- ht://dig Open source web crawling software

- iHOP Information retrieval system for the biomedical domain

- Information Storage and Retrieval Using Mumps (online GPL text)

- Lemur Language Modelling IR Toolkit

- Lucene Apache Jakarta project

- MG full-text retrieval system Now maintained by the Greenstone Digital Library Software Project

- SMART Early IR engine from Cornell University

- Terrier Information Retrieval Platform

- Wumpus multi-user information retrieval system

- Xapian Open source IR platform based on Muscat

- Zettair

Major information retrieval research groups

- Glasgow Information Retrieval Group

- Center for Intelligent Information Retrieval

- IIT Information Retrieval Lab

- Information Retrieval at Microsoft Research Cambridge

- CIR Centre for Information Retrieval

- PSU Intelligent Systems Research Laboratory

Major figures in information retrieval

- Calvin Mooers

- Eugene Garfield

- Gerard Salton

- W. Bruce Croft

- Karen Spärck Jones

- C. J. van Rijsbergen

- Stephen E. Robertson

- S. Dominich

Awards in this field: Tony Kent Strix award

ACM SIGIR Gerard Salton Award

- 1983 - Gerard Salton, Cornell University

- "About the future of automatic information retrieval"

- 1988 - Karen Spärck Jones, University of Cambridge

- "A look back and a look forward"

- 1991 - Cyril Cleverdon, Cranfield Institute of Technology

- "The significance of the Cranfield tests on index languages"

- 1994 - William S. Cooper, University of California, Berkeley

- "The formalism of probability theory in IR: a foundation or an encumbrance?"

- 1997 - Tefko Saracevic, Rutgers University

- "Users lost: reflections on the past, future, and limits of information science"

- 2000 - Stephen E. Robertson, City University London

- "On theoretical argument in information retrieval"

- 2003 - W. Bruce Croft, University of Massachusetts, Amherst

- "Information retrieval and computer science: an evolving relationship"

See also

- Automated information retrieval

- Controlled vocabulary

- Cross-language information retrieval

- Digital libraries

- Document classification

- Free text search

- Human–computer information retrieval

- Information extraction

- Information behavior

- Information science

- Information seeking

- Knowledge visualization

- Search engines

- Secrecy

- Spoken document retrieval

- tf-idf

External links

- ACM SIGIR: Information Retrieval Special Interest Group

- BCS IRSG: British Computer Society - Information Retrieval Specialist Group

- The Anatomy of a Large-Scale Hypertextual Web Search Engine

- Text Retrieval Conference (TREC)

- Information Retrieval (online book) by C. J. van Rijsbergen

- International Conference on Image and Video retrieval, July 21-23, 2004

- Glasgow Information Retrieval Group Wiki

- An introduction to IR

- Innovations in Search Conference, September 27-29, 2005

- Measuring Search Effectiveness

- Resources for Text, Speech and Language Processing

- Stanford CS276 course - Information Retrieval and Web Mining

This page uses Creative Commons licensed content from Wikipedia.